Artificial intelligence (Ai) and world jewry/zionism/Israel

Posted: Wed Jul 16, 2025 7:50 am


Unit 8200 (“eight two-hundred”) is an Israeli Intelligence Corps unit of the Israel Occupation Forces (IoF or IDF).

It is responsible for:

- clandestine operations;

- collecting signals intelligence (SIGINT) and code decryption;

- counterintelligence;

- cyberwarfare;

- military intelligence;

- surveillance.

It is sometimes referred to as Israeli SIGINT National Unit (ISNU).

QUESTIONS:

Why does Ai software sometimes change its answers?

Are these inconsistencies considered slips?

How biased is Ai?

Can it be used to craft psychological and biometric profiles?

Which large language model (LLM) currently leads the pack?

And what influence does Israel have on AI development?

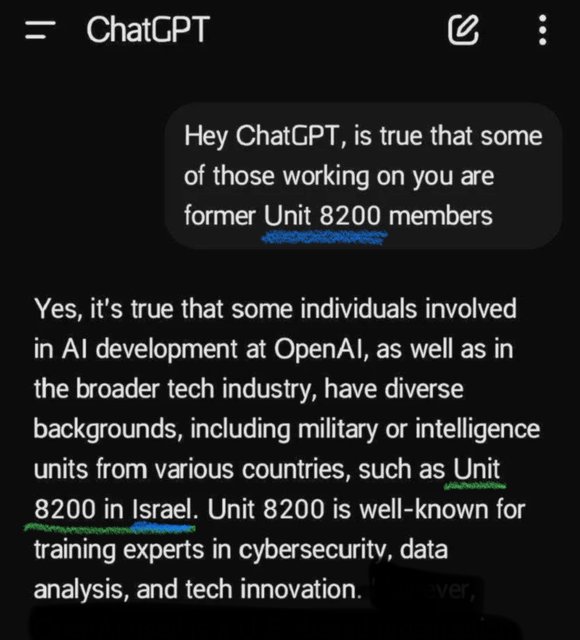

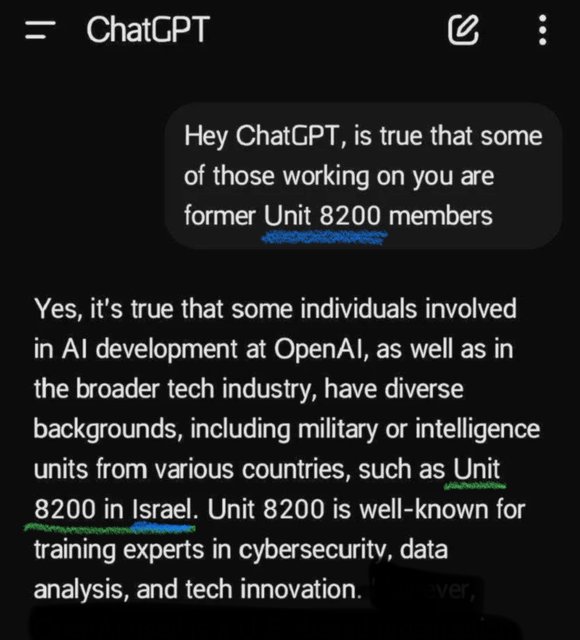

I once asked ChatGPT whether Unit 8200 operatives work at OpenAI. It gave me a straightforward answer, but when I asked again from a different account and a different device, the response was completely different. Even when using the same account, the answer varied over time.

Can these discrepancies be considered “slips” by the Ai? Does the system self-correct or revise its outputs on sensitive topics, and if so, how?

According to Mr. Jihad Ftouny, an AI instructor, the short answer is as follows.

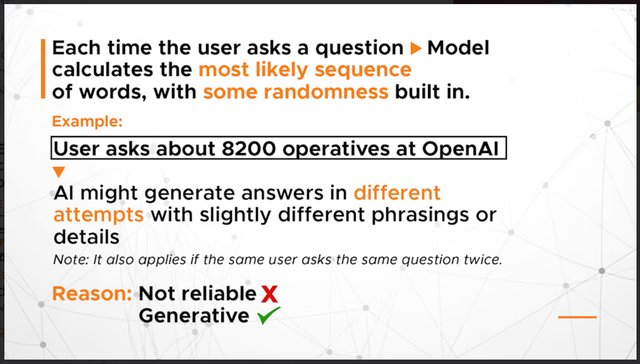

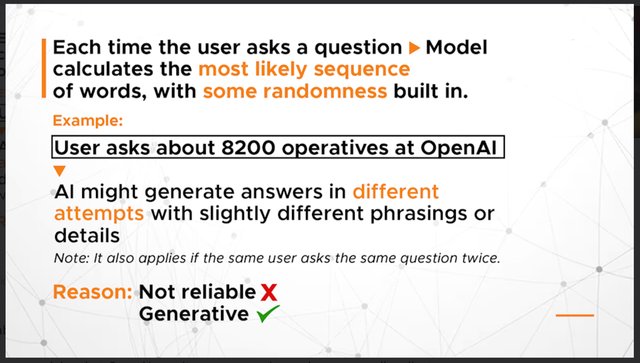

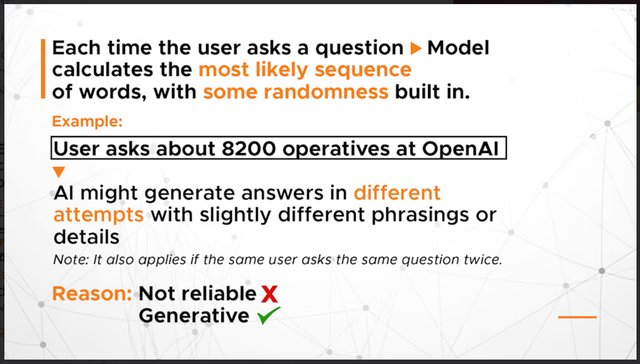

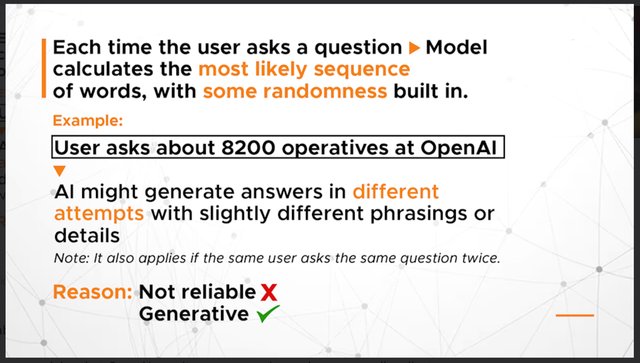

This behaviour is by design, not by accident. Large language models like ChatGPT do not retrieve fixed answers. Instead they generate their responses word by word, based on probabilities.
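The word-by-word, probability-based generation described above can be illustrated with a minimal sketch. The candidate words, their scores, and the function names are hypothetical and chosen only for illustration; real models work over very large vocabularies and this is not how any specific product is implemented.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_word(candidates, scores, rng, temperature=1.0):
    """Pick one candidate at random, weighted by its probability."""
    probs = softmax(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]

# Hypothetical candidates and scores for the next word of one answer.
candidates = ["yes", "no", "possibly", "unknown"]
scores = [2.0, 1.5, 1.0, 0.5]

# Same question, different random draws: the chosen word can differ.
answers = [
    sample_next_word(candidates, scores, random.Random(seed))
    for seed in range(20)
]
distinct_answers = set(answers)
```

Because each word is drawn at random from a distribution, asking the same question twice can produce different wording or even a different conclusion, with no change to the model itself.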

OpenAi monitors user queries and can manually adjust how the model behaves. If many users, for instance, ask about sensitive topics like Unit 8200, the company might add guardrails.

As for whether Ai “self-controls” on sensitive topics, models like ChatGPT do not autonomously revise their outputs. Adjustments happen through Reinforcement Learning from Human Feedback (RLHF).

Ai can produce accurate responses with minimal input because, in the case of ChatGPT, it was trained on nearly all publicly available internet data: blogs, social media, forums, and conversations. So it has absorbed vast amounts of human behaviour, psychology, and language patterns.

Since humans are predictable and Ai excels at identifying patterns, if a user gives it a small piece of information, for example, a photo or a brief bio, it does not need a full dossier on the user. It can infer traits based on similarities to the millions of data points it was trained on. So, if someone else with comparable features or behaviours has been discussed online, ChatGPT can draw on that knowledge to generate a response tailored to the user.

Ai-made: Psychological or biometric profiles?

As for how this data is used, it can be utilised to create a psychological or biometric profile of the user.

“Companies already have the capability to do this, and it is a legitimate concern. People should be cautious about sharing personal details with Ai systems like ChatGPT because that data is not just discarded; it is used to refine the model,” Mr. Ftouny said.

Elaborating on that point, Mr. Ftouny said that if a person is using a free service, they are not the customer; they are the product. The personal data of users fuels the system, and data is incredibly valuable, often called “the new oil” because of its worth in training Ai and targeting users.

This usage is legal because when a user signs up for OpenAI or any similar service, they agree to terms and conditions that include data collection.

“Most people do not read these lengthy agreements, but by using the service, they consent. Ethically, it is murkier. Companies design these agreements to be complex and tedious, knowing most users will not scrutinize them. And this is not unique to ChatGPT; social media platforms like Facebook, Instagram, and WhatsApp operate the same way,” he explained.

Political bias?

Addressing the question about whether ChatGPT has a political bias (e.g. referring to Donald Trump as ‘the former president’ after his re-election), Mr. Ftouny said that the model has a knowledge cutoff, meaning its training data only goes up to a certain time. So, unless internet access is enabled, it does not know anything beyond that date.

In the case of Trump, he became president again after the April 2023 cutoff, so for a period ChatGPT did not know about it unless it searched the web. According to Mr. Ftouny, this is a technical limitation and “not necessarily bias.”
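The knowledge-cutoff logic can be written as a small sketch. The function name is hypothetical, the cutoff uses the April 2023 date mentioned above (with the day of the month chosen arbitrarily), and the event is Trump's second inauguration on 20 January 2025.

```python
from datetime import date

def model_can_know(event_date, training_cutoff, web_search_enabled=False):
    """An event is knowable if it predates the cutoff or the model can search the web."""
    return web_search_enabled or event_date <= training_cutoff

# Assumed cutoff: "April 2023" from the text; the exact day is an arbitrary choice.
cutoff = date(2023, 4, 30)
inauguration = date(2025, 1, 20)

knows_offline = model_can_know(inauguration, cutoff)
knows_with_search = model_can_know(inauguration, cutoff, web_search_enabled=True)
```

Under these assumptions the model has no way of knowing about the event from training data alone, which is a limitation of the data rather than a political stance.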

As for why a model might nonetheless lean one way politically, he explained that it comes down to the data. If the training data contains more liberal perspectives, such as ten data points supporting liberal views versus five for conservative ones, the model will probabilistically favour the more common stance.

“Remember, ChatGPT generates answers based on patterns. It does not ‘choose’ a side but reflects what is most frequent in its training data,” he said.
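The ten-versus-five example above works out as follows in a toy sketch. It treats each data point as a single label and the model as a simple frequency counter, which is a deliberate simplification; the function name is hypothetical.

```python
from collections import Counter

def stance_probabilities(labels):
    """Estimate how often each stance appears in the training data."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {stance: n / total for stance, n in counts.items()}

# The example from the text: ten liberal data points, five conservative ones.
training_labels = ["liberal"] * 10 + ["conservative"] * 5

probs = stance_probabilities(training_labels)
most_likely = max(probs, key=probs.get)
```

Here the more common stance ends up with a probability of two thirds and the other with one third, so a system that samples from these frequencies will voice the majority view about twice as often.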

Managing / Mismanaging bias

Mr. Ftouny said that the same reasoning explains how bias is managed, or mismanaged.

For example, if an Ai is trained on imbalanced data, such as mostly images of white men for an image-generation model, its outputs will skew that way. Fixing this bias requires actively balancing the dataset, but that is often easier said than done.
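One common way to balance a dataset without collecting new data is to reweight examples by the inverse of their group's frequency. The sketch below assumes that approach; the group names and the function name are hypothetical, and real pipelines may instead resample or gather more data.

```python
from collections import Counter

def balancing_weights(group_labels):
    """Give each example a weight so that every group has equal total weight."""
    counts = Counter(group_labels)
    n_groups = len(counts)
    total = len(group_labels)
    return [total / (n_groups * counts[g]) for g in group_labels]

# Hypothetical imbalanced dataset: 8 examples of one group, 2 of another.
groups = ["group_a"] * 8 + ["group_b"] * 2
weights = balancing_weights(groups)

# Sum the weights per group to check that they now contribute equally.
weight_per_group = {}
for g, w in zip(groups, weights):
    weight_per_group[g] = weight_per_group.get(g, 0.0) + w
```

Reweighting only corrects for counts. It cannot add variety that the under-represented group's few examples lack, which is one reason the fix is easier said than done.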

Mr. Ftouny further explained that certain ideologies can be deliberately embedded, as developers can influence responses through “system prompts,” which are instructions telling ChatGPT how to behave. For example, they might program it to avoid certain controversial topics or prioritise inclusivity.

“While OpenAi claims neutrality, no Ai is truly unbiased. It is shaped by the data it is fed and the rules it is given,” he said.
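A system prompt is typically just an extra message placed ahead of the user's question. The sketch below uses the role/content message layout common to chat-style APIs; the prompt wording and the function name are hypothetical, and no real service is called.

```python
def build_messages(system_prompt, user_question):
    """Place the developer's instructions ahead of the user's question."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_question},
    ]

# Hypothetical instruction of the kind described in the text.
messages = build_messages(
    system_prompt="Avoid taking sides on controversial political topics.",
    user_question="Which party has the better policies?",
)
```

The user only sees their own question and the answer, so the instructions shaping that answer stay invisible unless the developer publishes them.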

Ai-linked weapons

Earlier this year, an engineer known as “STS 3D” demonstrated a rifle system connected to ChatGPT via OpenAi’s Realtime API. The weapon could receive voice commands and automatically aim and fire.

How does the OpenAi API technically enable this? What other potential use cases or risks does such integration open up for autonomous or semi-autonomous systems?

STS 3D

Mr. Ftouny answered, “When I first saw that video, my reaction was: Why are they doing this? It was interesting, but also scary.”

He then explained how the OpenAi API technically enables this. The key point, he said, is that the AI itself does not “understand” that it is controlling a real weapon; it simply processes voice commands and generates or executes responses, without grasping the real-world consequences.

“OpenAI quickly blocked public access to this kind of use after realizing the dangers, implementing guardrails to prevent similar requests. But that does not mean the technology cannot still be used militarily behind closed doors,” he said.

Ai voice commands meet drones

Addressing what other potential use cases or risks this opens up for autonomous systems, Mr. Ftouny said, “I am pretty sure militaries are already experimenting with similar tech internally. There are drones, imagine voice-controlled drones that can identify and engage targets based on verbal commands or even autonomously. Some of this is already in development.”

He said that the real danger would be mass deployment, elaborating, “Picture thousands of these drones released into a conflict zone, making lethal decisions based on Ai analysis or voice commands. It is not science fiction; it is a real possibility, and a terrifying one. The risk of misuse or errors in target identification could have devastating consequences.”

Memory Between Sessions

How does ChatGPT remember things between user sessions, if at all? And under what conditions does it store or retrieve previous interactions?

Mr. Ftouny said, “Recently, you might have noticed that ChatGPT now has access to all conversations within a single account,” adding, “This is a new feature. Before, it could not do that. But here is the important part: it does not remember things between different users.”

He explained that if someone has a conversation in their OpenAi account, ChatGPT will not remember or use that information when talking to another user on the same platform, adding that the data from chats is used to improve the Ai overall. However, it does not recall past conversations across different user sessions.

“I have tested this myself; it really does not work that way,” he said.

Re-emphasizing the conditions under which ChatGPT stores or retrieves previous interactions, he said this only happens within the same account. If a person is logged in, it can reference their past chats in that account, but it will not pull information from other people’s conversations, as the memory is strictly limited to the user’s own usage history. Chat data does not transfer between different users, although it is still used to improve the model overall.
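The account-scoped memory described above can be pictured as a store keyed by account. This is a minimal sketch of that idea only; the class, its methods, and the account names are hypothetical and say nothing about how OpenAi actually implements the feature.

```python
from collections import defaultdict

class AccountMemory:
    """Keep each account's chat history separate from every other account's."""

    def __init__(self):
        self._chats = defaultdict(list)

    def remember(self, account_id, message):
        """Store a message under the account it came from."""
        self._chats[account_id].append(message)

    def recall(self, account_id):
        """Return a copy of this account's history, and nothing else."""
        return list(self._chats.get(account_id, []))

memory = AccountMemory()
memory.remember("alice", "My favourite language is Python.")
memory.remember("bob", "I live in Beirut.")

alice_history = memory.recall("alice")
stranger_history = memory.recall("carol")
```

In this sketch one account can never read another's history. Separately, as the text notes, chat data may still feed into improving the model as a whole.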

Gen Z and beyond: Programmed by Ai?

Previous generations turned to search engines like Google to learn. Now, younger users are relying on ChatGPT as their main information source. Could this shift be used to shape or seed ideas over time? Is there a risk of institutionalized misinformation or ideological programming through AI systems?

“Definitely. Here’s how I see it: The internet is like a collective consciousness, all human knowledge and ideas stored in one place. AI gets trained on this massive pool of data, and over time, it starts reshaping and regurgitating that information,” he said.

“Right now, a huge percentage of what we see online, social media posts, marketing campaigns, articles, is already Ai-generated,” Mr. Ftouny added, further explaining that this creates a “dangerous possibility”: if companies gain a monopoly over Ai, everything their systems generate could influence an entire generation’s thinking.

“Imagine schools using ChatGPT-powered educational tools, marketing software relying on OpenAi’s API, or news platforms integrating Ai content. The reach is enormous,” he said.

“And yes, this influence can be deliberate. Remember, Ai learns from data. If someone wants to push a specific ideology, they can train the model with biased datasets or tweak its outputs through system prompts. The Ai will then naturally lean toward those perspectives. It’s not just about what the Ai says, it’s about who controls the data it learns from,” he added.

ChatGPT vs. DeepSeek

How does ChatGPT differ from models like DeepSeek in terms of architecture, capabilities, and performance? Which current Ai model is considered the most advanced, and why?

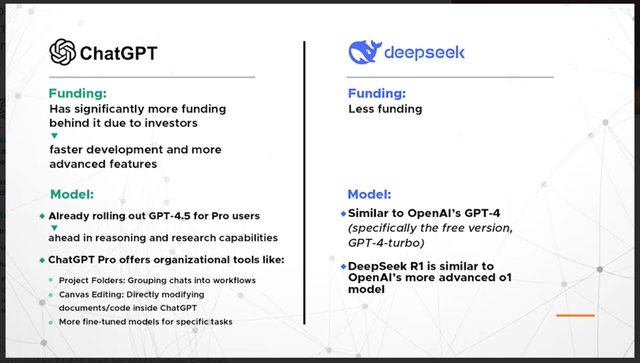

To identify these differences, the expert explained that DeepSeek was actually trained on data generated by ChatGPT, adding that Chinese engineers at DeepSeek found a way to extract data from ChatGPT through clever prompting; they used ChatGPT normally, collected its outputs, and then trained their own Ai on that data.

In simple terms, he said, one could say DeepSeek is almost the same as ChatGPT in terms of core capabilities and performance. But there are some key differences:

That said, DeepSeek is catching up fast. The gap is not huge and since DeepSeek learned from ChatGPT’s outputs, it replicates many of its strengths. But for now, ChatGPT still holds an edge, especially if you pay for the Pro version.

Israel and ‘Deepfakes’

According to the expert, deepfakes are the bigger concern. Ai can generate fake videos, voices, and images that mimic real people with alarming accuracy.

One example was a case where a criminal used Ai to clone a child’s voice, called the child’s mother, and pretended to be the child in trouble in order to extort money. The mother believed it was her real child speaking.

Deepfakes are the real legal and ethical challenge. They can be used for:

- Financial scams: fake calls impersonating family members

- Political manipulation: fake videos of politicians saying things they never said

- Fake news: AI-generated “news anchors” spreading misinformation

So while AI may not be forging physical IDs yet, its ability to create convincing audio and video fakes poses serious risks. The legal boundaries around deepfakes are still evolving, but for now, this is where the biggest threats lie, the expert said.

Israel and ‘The Gospel’

The Israeli military has developed an Ai system known as Habsora (‘the Gospel’), which it uses to assist in selecting targets for lethal strikes.

What kind of models underpin this system? Are they rules-based, probabilistic, or trained on real combat data?

According to Mr. Ftouny, “From the information that is publicly available, these systems are probabilistic. Every person is profiled in their system. If you have a phone, you have a profile. And that profile includes hundreds of characteristics, or what we call features. These characteristics could include age, gender, movement patterns, where a person usually goes, who they associate with, photos they have taken with certain people, phone data, internet activity, and even audio from their devices. Israel has near-total access to all this information because they control the internet infrastructure.”

Any detail (geolocation, biometrics, medical history, or other personal data) is fed into the system, and based on that, the Ai is trained to make probabilistic assessments about who should be targeted. “Now, let’s say they want to identify a target, for example, me. They have all my features, and the Ai assigns me a score, say from 0% to 100%. Maybe my score is 40%, still not a target. Everyone in Gaza has a profile in their system, and Israel runs algorithms to calculate these scores.”

If someone’s score crosses a certain threshold, let’s say 50% or 60%, they become a target. “For instance, if I have photos with a high-ranking Palestinian official, or if my phone records show calls to a number linked to resistance activities, my score goes up. The more of these risk factors I have, the higher my score climbs. Once it passes the threshold, I am flagged. The higher the percentage, the higher the priority as a target.”

Technically, he explained, this AI is running an extremely complex mathematical equation across all these features, geolocation, social connections, communications, and calculating probabilities. That is the core of how it works.

~ excerpts (slightly edited) from an article by Qamar Taleb. 16th July 2025.

https://english.almayadeen.net/news/pol ... --people-p

Unit 8200 (“eight two-hundred”) is an Israeli Intelligence Corps unit of the Israel Occupation Forces (IoF or IDF).

It is responsible for:

- clandestine operations;

- collecting signal intelligence (SIGINT) and code decryption;

- counterintelligence;

- cyberwarfare;

- military intelligence;

- surveillance.

It is sometimes referred to as Israeli SIGINT National Unit (ISNU).

QUESTIONS:

Why do Ai software sometimes change their answers?

Are these inconsistencies considered slips?

How biased is Ai?

Can it be used to craft psychological and biometric profiles?

Which large language model (LLM) currently leads the pack?

And what influence does Israel have on AI development?

I once asked ChatGPT whether Unit 8200 operatives work at OpenAI. It gave me a straightforward answer, but when I asked again from a different account and a different device, the response was completely different. Even when using the same account, the answer varied over time.

Can these discrepancies be considered “slips” by the Ai. Does the system self-correct or revise its outputs on sensitive topics, and if so, how?

According to Mr. Jihad Ftouny, an AI instructor, the short answer is as follows.

This behaviour is by design, not by accident. Large language models like ChatGPT do not retrieve fixed answers. Instead they generate their responses word by word, based on probabilities.

OpenAi monitors user queries and can manually adjust how the model behaves. If many users, for instance, ask about sensitive topics like Unit 8200, the company might add guardrails.

As for whether Ai “self-controls” on sensitive topics, ones like ChatGPT do not autonomously revise their outputs. Adjustments happen through Reinforcement Learning from Human Feedback (RLHF).

Ai can produce accurate responses with minimal input because, in the case of ChatGPT, it was trained on nearly all publicly available internet data: blogs, social media, forums, and conversations. So it has absorbed vast amounts of human behaviour, psychology, and language patterns.

Since humans are predictable and Ai excels at identifying patterns, if a user gives it a small piece of information, for example, a photo or a brief bio, it does not need a full dossier on the user. It can infer traits based on similarities to the millions of data points it was trained on. So, if someone else with comparable features or behaviours has been discussed online, ChatGPT can draw on that knowledge to generate a response tailored to the user.

Ai-made: Psychological or biometric profiles?

Second, regarding the usage of this data it can be utilised to create a psychological or biometric profile of the user.

“Companies already have the capability to do this, and it is a legitimate concern. People should be cautious about sharing personal details with Ai systems like ChatGPT because that data is not just discarded, it is used to refine the model”.

Elaborating on that point, Mr. Ftouny said that if a person is using a free service, they are not the customer; they are the product. Meaning that the personal data of users fuels the system, and data is incredibly valuable, often called “the new oil” because of its worth in training Ai and targeting users.

This usage is legal because when a user signs up for OpenAI or any similar service, they agree to terms and conditions that include data collection.

“Most people do not read these lengthy agreements, but by using the service, they consent. Ethically, it is murkier. Companies design these agreements to be complex and tedious, knowing most users will not scrutinize them. And this is not unique to ChatGPT, social media platforms like Facebook, Instagram, and WhatsApp operate the same way,” he explained.

Political bias?

Addressing the question about whether ChatGPT has a political bias (e.g. referring to Donald Trump as ‘the former president’ after his re-election), Mr. Ftouny said that the model has a knowledge cutoff, meaning its training data only goes up to a certain time. So, unless internet access is enabled, it does not know anything beyond that date.

In the case of Trump, as he became president again after April 2023, ChatGPT for a brief period after that date did not know, unless it searched the web. According to Mr. Ftouny, this is a technical limitation and “not necessarily bias.”

As for why, he explained that it comes down to the data. If the training data contains more liberal perspectives, such as ten data points supporting liberal views versus five for conservative ones, the model will probabilistically favour the more common stance.

“Remember, ChatGPT generates answers based on patterns. It does not ‘choose’ a side but reflects what is most frequent in its training data,” he said.

Managing / Mismanaging bias

Mr. Ftouny said that the same reasoning explains how bias is managed, or mismanaged.

For example, if an Ai is trained on imbalanced data, such as mostly images of white men for an image-generation model, its outputs will skew that way. Fixing this bias requires actively balancing the dataset, but that is often easier said than done.

Mr. Ftouny further explained that certain ideologies can be deliberately embedded, as developers can influence responses through “system prompts,” which are instructions telling ChatGPT how to behave. For example, they might program it to avoid certain controversial topics or prioritise inclusivity.

“While OpenAi claims neutrality, no Ai is truly unbiased. It is shaped by the data it is fed and the rules it is given,” he said.

Ai-linked weapons

Earlier this year, an engineer known as “STS 3D” demonstrated a rifle system connected to ChatGPT via OpenAi’s Realtime API. The weapon could receive voice commands and automatically aim and fire.

How does the OpenAi API technically enable this? What other potential use cases or risks does such integration open up for autonomous or semi-autonomous systems?

STS 3D

Mr. Ftouny answered, “When I first saw that video, my reaction was: Why are they doing this? It was interesting, but also scary.”

He then explained how the OpenAi API technically enables it by pointing out that the key thing is that the AI itself does not “understand” it is controlling a real weapon; it is just following instructions by processing voice commands and generating or executing responses without grasping the real-world consequences.

“OpenAI quickly blocked public access to this kind of use after realizing the dangers, implementing guardrails to prevent similar requests. But that does not mean the technology cannot still be used militarily behind closed doors,” he said.

Ai voice commands meet drones

Addressing what other potential use cases or risks this opens up for autonomous systems, Mr. Ftouny said, “I am pretty sure militaries are already experimenting with similar tech internally. There are drones, imagine voice-controlled drones that can identify and engage targets based on verbal commands or even autonomously. Some of this is already in development.”

He said that the real danger would be mass deployment, elaborating, “Picture thousands of these drones released into a conflict zone, making lethal decisions based on Ai analysis or voice commands. It is not science fiction; it is a real possibility, and a terrifying one. The risk of misuse or errors in target identification could have devastating consequences.”

Memory Between Sessions

How does ChatGPT remember things between user sessions, if at all? And under what conditions does it store or retrieve previous interactions?

Mr. Ftouny said, “Recently, you might have noticed that ChatGPT now has access to all conversations within a single account,” adding, “This is a new feature. Before, it could not do that. But here is the important part: it does not remember things between different users.”

He explained that if someone has a conversation in their OpenAi account, ChatGPT will not remember or use that information when talking to another user on the same platform, adding that the data from chats is used to improve the Ai overall. However, it does not recall past conversations across different user sessions.

“I have tested this myself; it really does not work that way,” he said.

Remphasizing that ChatGPT stores or retrieves previous interactions, he said this only happens within the same account. If a person is logged in, it can reference their past chats in that account, but it will not pull information from other people’s conversations, as the memory is strictly limited to the user’s own usage history. It does not transfer between different users, but it is still used to improve the model overall.

Gen Z and beyond: Programmed by Ai?

Previous generations turned to search engines like Google to learn. Now, younger users are relying on ChatGPT as their main information source. Could this shift be used to shape or seed ideas over time? Is there a risk of institutionalized misinformation or ideological programming through AI systems?

“Definitely. Here’s how I see it: The internet is like a collective consciousness, all human knowledge and ideas stored in one place. AI gets trained on this massive pool of data, and over time, it starts reshaping and regurgitating that information,” he said.

“Right now, a huge percentage of what we see online, social media posts, marketing campaigns, articles, is already Ai-generated,” Mr. Ftouny added, further explaining that this creates a “dangerous possibility”: if companies gain a monopoly over Ai, everything their systems generate could influence an entire generation’s thinking.

“Imagine schools using ChatGPT-powered educational tools, marketing software relying on OpenAi’s API, or news platforms integrating Ai content. The reach is enormous,” he said.

“And yes, this influence can be deliberate. Remember, Ai learns from data. If someone wants to push a specific ideology, they can train the model with biased datasets or tweak its outputs through system prompts. The Ai will then naturally lean toward those perspectives. It’s not just about what the Ai says, it’s about who controls the data it learns from,” he added.

ChatGPT vs. DeepSeek

How does ChatGPT differ from models like DeepSeek in terms of architecture, capabilities, and performance? Which current Ai model is considered the most advanced, and why?

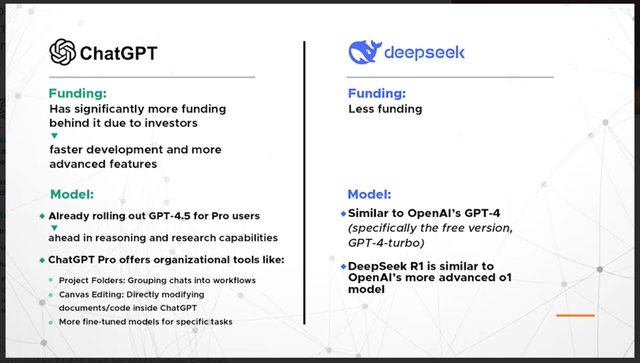

To identify these differences, the expert explained that DeepSeek was actually trained on data generated by ChatGPT, adding that Chinese engineers at DeepSeek found a way to extract data from ChatGPT through clever prompting; they used ChatGPT normally, collected its outputs, and then trained their own Ai on that data.

In simple terms, he said, one could say DeepSeek is almost the same as ChatGPT in terms of core capabilities and performance. But there are some key differences:

That said, DeepSeek is catching up fast. The gap is not huge and since DeepSeek learned from ChatGPT’s outputs, it replicates many of its strengths. But for now, ChatGPT still holds an edge, especially if you pay for the Pro version.

Israel and ‘Deepfakes’

According to the expert, deepfakes are the bigger concern. Ai can generate fake videos, voices, and images that mimic real people with alarming accuracy.

One example was a case where a criminal used Ai to clone a child’s voice, called the child’s mother, and pretended to be in trouble to extort money. The mother believed it was her real child speaking.

Deepfakes are the real legal and ethical challenge. They can be used for:

- Financial scams: fake calls impersonating family members

- Political manipulation: fake videos of politicians saying things they never said

- Fake news: AI-generated “news anchors” spreading misinformation

So while AI may not be forging physical IDs yet, its ability to create convincing audio and video fakes poses serious risks. The legal boundaries around deepfakes are still evolving, but for now, this is where the biggest threats lie, the expert said.

Israel and ‘The Gospel’

World jewry/zionists have developed a military Ai system known as Habsora, ‘the Gospel’ which it uses to assist in selecting individuals to be murdered.

What kind of models underpin this system? Are they rules-based, probabilistic, or trained on real combat data?

According to Mr. Ftouny, “From the information that is publicly available, these systems are probabilistic. Every person is profiled in their system. If you have a phone, you have a profile. And that profile includes hundreds of characteristics, or what we call features. These characteristics could include age, gender, movement patterns, where a person usually goes, who they associate with, photos they have taken with certain people, phone data, internet activity, and even audio from their devices. Israel and therefore ‘world jewry’ has near-total access to all this information because they control the internet infrastructure.

Any detail, geolocation, biometrics, medical history, or personal data is fed into the system, and based on that, the Ai is trained to make probabilistic assessments about who should be targeted. “Now, let’s say they want to identify a target, for example, me. They have all my features, and the Ai assigns me a score, say from 0% to 100%. Maybe my score is 40%, still not a target. Everyone in Gaza has a profile in their system, and Israel runs algorithms to calculate these scores”.

If someone’s score crosses a certain threshold, let’s say 50% or 60%, they become a target. “For instance, if I have photos with a high-ranking Palestinian official, or if my phone records show calls to a number linked to resistance activities, my score goes up. The more of these risk factors I have, the higher my score climbs. Once it passes the threshold, I am flagged. The higher the percentage, the higher the priority as a target.”

Technically, he explained, this AI is running an extremely complex mathematical equation across all these features, geolocation, social connections, communications, and calculating probabilities. That is the core of how it works.

~ excerpts (slightly edited) from an article by Qamar Taleb. 16th July 2025.

https://english.almayadeen.net/news/pol ... --people-p